Microsoft Launches Proprietary AI Processor to Drive Future Model Innovation

Maia 200: Pioneering Advanced AI Inference Capabilities

Microsoft has deployed its newly developed custom AI chip, the Maia 200, within one of its data centers, marking a pivotal advancement in the company’s AI infrastructure. Plans are underway to roll out this technology across multiple facilities to bolster computational efficiency.

Designed explicitly for AI inference, the Maia 200 excels at handling intricate calculations essential for production-grade artificial intelligence models. Microsoft asserts that this chip delivers superior processing speeds and enhanced performance compared to competitors like Amazon’s Trainium processors and Google’s latest Tensor Processing Units (TPUs).

The Shift Toward Proprietary AI Hardware Amid Supply Challenges

The persistent global shortage of high-performance GPUs, a market dominated by Nvidia, has prompted leading cloud providers such as Microsoft to develop their own specialized chips. This strategic pivot aims to reduce dependency on external suppliers while addressing escalating demand for powerful AI compute resources.

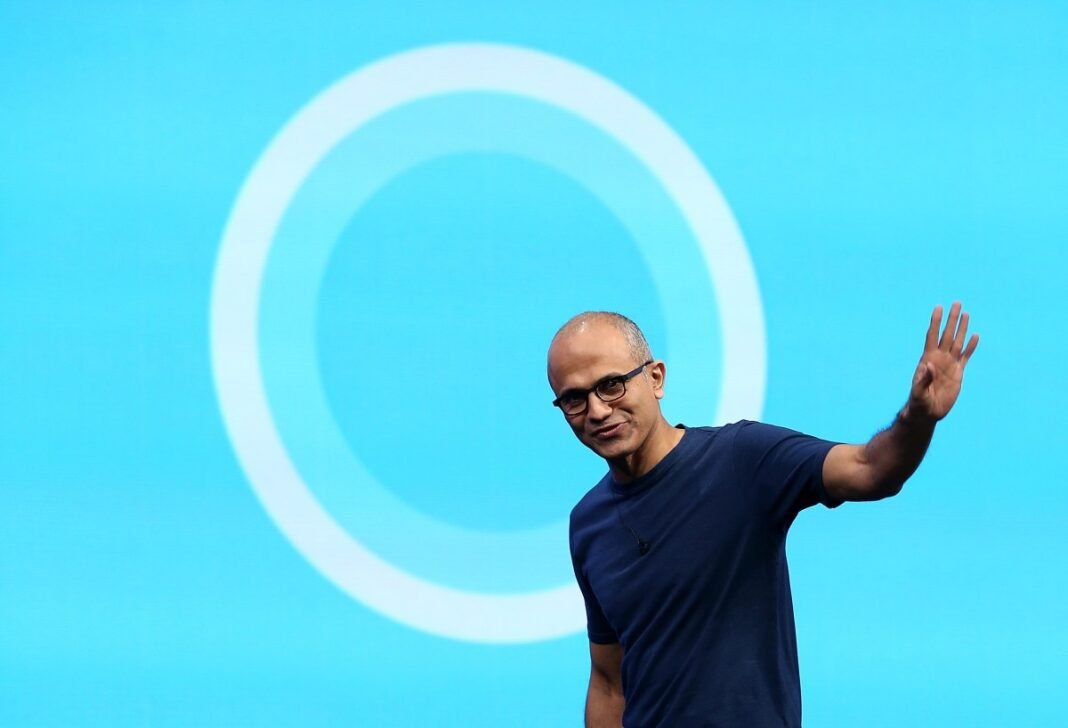

Despite advancing internal hardware capabilities, Microsoft CEO Satya Nadella emphasized the importance of maintaining strong partnerships with established manufacturers like Nvidia and AMD. He highlighted that innovation is an ongoing race: “Leading today does not guarantee dominance tomorrow.”

A Balanced Strategy: Vertical Integration Coupled with Collaborative Innovation

Nadella clarified that Microsoft’s approach involves vertical integration, from silicon design through software optimization, without excluding third-party technologies. This hybrid model lets the company draw on diverse innovations while tailoring proprietary solutions to specific workloads.

The Maia 200’s Role in Empowering Microsoft’s Superintelligence Team

The initial deployment of Maia 200 targets Microsoft’s elite Superintelligence team, which focuses on developing next-generation frontier AI models aimed at reducing reliance on external providers such as OpenAI and Anthropic. Mustafa Suleyman, a co-founder of Google DeepMind who now heads this group, described the team’s exclusive early access as a meaningful milestone that will accelerate its research.

Enhancing Azure Cloud Services and Supporting OpenAI Models

Apart from internal applications, Maia 200 will boost performance for OpenAI models hosted on Microsoft Azure’s cloud platform. However, securing sufficient quantities of cutting-edge AI hardware remains a widespread challenge amid surging demand from commercial clients and internal teams alike.

“This launch marks a major step forward,” Suleyman stated during the rollout event, expressing confidence that bespoke hardware designed specifically for inference tasks will accelerate development timelines for advanced models.

The Rising Significance of Custom Chips in Meeting Exploding AI Compute Needs

- Industry Landscape: Global investment in artificial intelligence infrastructure is expected to surpass $150 billion by 2026, intensifying competition among cloud leaders striving for greater control over computing power.

- Diverse Use Cases: From powering real-time multilingual virtual assistants used worldwide to enabling ultra-responsive autonomous vehicle navigation systems, customized inference accelerators like Maia 200 have become critical components within modern technology ecosystems.

- A Financial Sector Example: Several banks implementing fraud detection algorithms reported latency reductions of up to 40% after moving from general-purpose GPUs to specialized inference chips similar in concept to Microsoft’s Maia 200.

A Forward Look: The Future Trajectory of Tailored Silicon Solutions

This development reflects an industry-wide trend in which top tech companies increasingly invest not only in proprietary software but also in custom-designed silicon built around evolving machine learning demands, a movement poised to accelerate as generative AI applications expand globally.