Escalating Disputes Over AI Safety: Silicon Valley Faces Off with Advocates

Clash Between Silicon Valley Leaders and AI Safety Campaigners

Recently, influential Silicon Valley figures, including White House AI and crypto czar David Sacks and OpenAI’s Chief Strategy Officer Jason Kwon, ignited debate by criticizing groups that advocate for AI safety. They accused some of these advocates of serving their own interests, or those of wealthy backers, rather than the public good.

Background: Patterns of Resistance to AI Safety Initiatives

This tension is part of a recurring pattern in which Silicon Valley has tried to discredit and intimidate its critics. In 2024, rumors spread in venture capital circles that California’s proposed AI safety bill, SB 1047, would criminalize startup founders. Experts quickly debunked these claims, but Governor Gavin Newsom ultimately vetoed the bill.

Nonprofit Leaders’ Concerns Over Backlash

The recent remarks from Sacks and OpenAI have unsettled many nonprofit leaders working on AI safety. Several spoke only on condition of anonymity, fearing retaliation against their organizations or the loss of funding.

Balancing Ethical AI Development with Market Growth Pressures

The core dispute reflects a broader industry dilemma: how to responsibly develop AI technologies while rapidly scaling products for widespread adoption. This conflict was recently dissected on a leading technology podcast that also examined California’s new chatbot regulations alongside OpenAI’s evolving policies on sensitive content moderation.

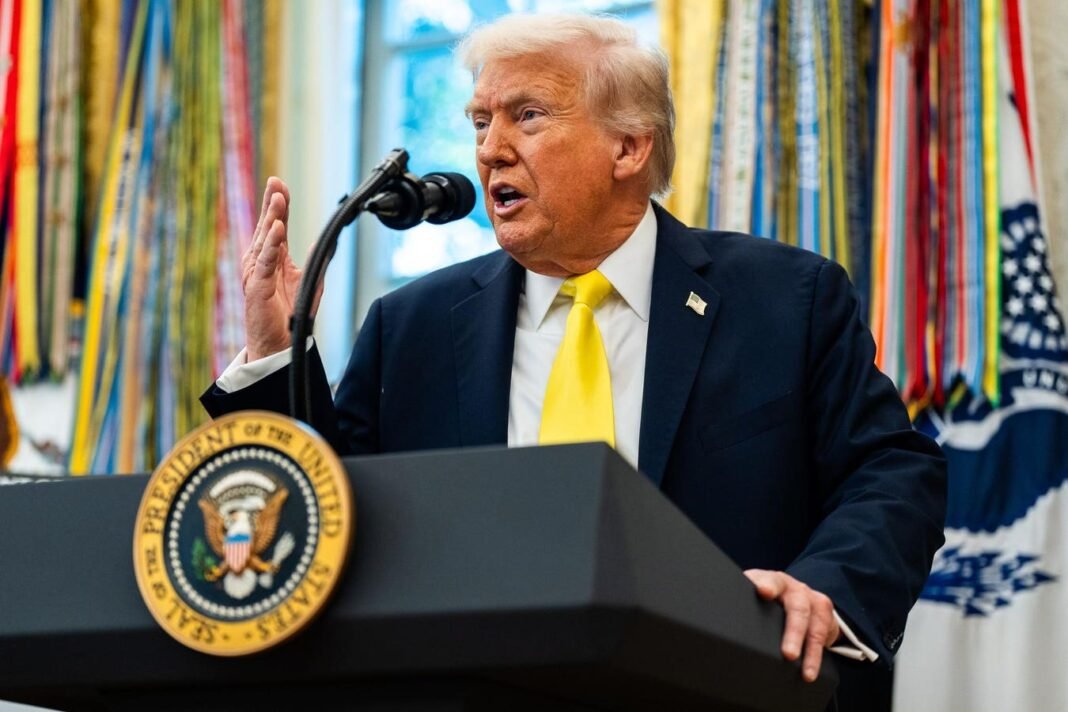

David Sacks’ Accusations Against Anthropic’s Regulatory Influence

On the social media platform X, David Sacks accused Anthropic, a company vocal about AI risks such as job losses and cybersecurity threats, of using fear-based tactics to promote legislation that benefits itself while imposing heavy compliance burdens on smaller startups. Anthropic notably backed California Senate Bill 53 (SB 53), which requires large AI companies to submit detailed safety reports and was enacted last month.

“Anthropic is orchestrating a sophisticated regulatory capture strategy fueled by fear-mongering. It bears primary responsibility for the wave of state regulations harming the startup ecosystem.” – David Sacks

Sacks also argued that Anthropic has undercut its own regulatory agenda by consistently positioning itself as a foe of the Trump administration.

OpenAI’s Legal Measures Targeting Critical Nonprofits

Meanwhile, OpenAI’s Jason Kwon explained why the company sent subpoenas to several AI safety nonprofits, including Encode. These organizations had publicly criticized OpenAI’s restructuring. After Elon Musk sued OpenAI, alleging that it had strayed from its nonprofit mission, Encode filed an amicus brief supporting Musk.

“There’s much more beneath the surface… We are actively defending ourselves against Elon Musk’s lawsuit aimed at harming OpenAI for his own financial gain.” – Jason Kwon

Kwon said the situation also raised transparency questions about who funds these nonprofits and whether they coordinated with outside parties.

Details on Subpoenas and Industry Reactions

Reports indicate that OpenAI subpoenaed communications from Encode and six other nonprofits concerning their interactions with Elon Musk and Meta CEO Mark Zuckerberg, as well as their support for SB 53. The legal action has heightened fears within the AI safety community that the company is trying to silence dissenting voices.

Tensions Within OpenAI Between Policy and Research Divisions

An insider described growing friction inside OpenAI between its policy team, which opposed SB 53 in favor of uniform federal regulation, and its research division, which openly publishes findings highlighting AI risks.

Joshua Achiam, head of mission alignment at OpenAI, voiced unease over the subpoenas: “At potential risk to my career I must say: this doesn’t feel right.”

Views from Other Leaders in the AI Safety Field

Brendan Steinhauser, CEO of the Alliance for Secure AI (which was not subpoenaed), suggested that OpenAI sees its critics as pawns in a Musk-led conspiracy, but noted that many in the safety community are themselves critical of the safety practices at Elon Musk’s xAI.

Steinhauser commented: “OpenAI’s actions seem designed to intimidate critics and deter other nonprofits from speaking out. Meanwhile, figures like Sacks appear concerned about increasing accountability demands placed on tech companies.”

Encouraging Practical Engagement with Everyday Users

Sriram Krishnan, senior White House policy advisor on AI and former venture capitalist, urged safety advocates to connect more closely with real-world users and organizations implementing AI technologies instead of remaining detached from practical challenges.

Public Attitudes Toward AI Risks and Opportunities

A recent Pew Research Center survey found nearly half of Americans feel more worried than hopeful about the impact of AI. Additional studies show voters prioritize concerns such as job displacement and misinformation over existential threats often emphasized by some safety advocates.

Navigating the Fine Line Between Innovation and Regulation

The surge in AI investment continues to drive significant economic growth across the U.S., yet it also raises fears that overly stringent regulation could stifle innovation. Striking the right balance remains a key challenge for policymakers and industry leaders amid rapid technological change.

The Growing Momentum Behind the AI Safety Movement

Despite resistance from parts of Silicon Valley, support for the AI safety movement is gaining strength as 2026 approaches. The pushback from industry insiders may indicate that advocacy efforts are increasingly influencing policy discussions and shaping future regulatory frameworks.