Memory’s Growing Impact on AI Infrastructure Expenditure

While Nvidia GPUs often dominate conversations about AI infrastructure spending, memory components are rapidly growing in importance. As hyperscale cloud operators prepare to spend tens of billions of dollars expanding data center capacity, DRAM prices have skyrocketed, climbing nearly sevenfold within a single year.

The Crucial Role of Memory Optimization in Enhancing AI Efficiency

Beyond hardware investment, mastering memory management is becoming a cornerstone of AI system performance. Delivering the right data to an AI model at precisely the right moment can dramatically reduce the number of tokens consumed by inference requests. This kind of optimization is essential for enterprises aiming to stay profitable and keep an edge in a fiercely competitive market.

Expert Perspectives on Memory’s Influence in AI Systems

Industry veterans specializing in semiconductors emphasize that memory chips shape not only physical hardware design but also software-level efficiency within artificial intelligence frameworks. Their insights reveal how innovative caching mechanisms are reshaping operational expenses and boosting overall throughput.

A compelling illustration comes from Anthropic’s evolving prompt caching strategy: what started as a simple pricing scheme has grown into a tiered model with two cache durations, five minutes and one hour, each with its own price for cache writes, alongside a separate price for cache reads. This nuanced approach reflects a deliberate effort to manage how cached data is stored and reused.
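To make the tiered pricing concrete, the following sketch compares the cost of resending a shared prompt prefix with no cache, a five-minute cache, and a one-hour cache. It is a simplified model, not Anthropic's billing logic: the multipliers (1.25x the base input price for five-minute writes, 2x for one-hour writes, 0.1x for reads) are assumptions loosely based on published pricing and should be checked against current rates, the names `CacheTier` and `cached_prefix_cost` are hypothetical, and the model assumes every cache hit refreshes the entry's lifetime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheTier:
    """A prompt-cache tier; multipliers are relative to the base input-token price."""
    name: str
    ttl_minutes: float
    write_multiplier: float
    read_multiplier: float


# Assumed multipliers for illustration only; check the provider's current price list.
FIVE_MINUTE = CacheTier("5m", ttl_minutes=5, write_multiplier=1.25, read_multiplier=0.1)
ONE_HOUR = CacheTier("1h", ttl_minutes=60, write_multiplier=2.0, read_multiplier=0.1)


def cached_prefix_cost(tier, prefix_tokens, arrivals, price_per_token):
    """Cost of sending the same prompt prefix at each arrival time (in minutes).

    Assumes each cache hit refreshes the TTL, and any request arriving after the
    TTL has lapsed pays the write price to re-cache the prefix.
    """
    total = 0.0
    last_use = None
    for t in arrivals:
        hit = last_use is not None and t - last_use < tier.ttl_minutes
        multiplier = tier.read_multiplier if hit else tier.write_multiplier
        total += multiplier * prefix_tokens * price_per_token
        last_use = t
    return total


def uncached_prefix_cost(prefix_tokens, arrivals, price_per_token):
    """Cost when every request pays the full input price for the prefix."""
    return len(arrivals) * prefix_tokens * price_per_token


# One request every 10 minutes for an hour, with a 10,000-token shared prefix.
# A price of 1.0 per token expresses costs in units of the base input price.
arrivals = [0, 10, 20, 30, 40, 50]
for label, cost in [
    ("uncached", uncached_prefix_cost(10_000, arrivals, 1.0)),
    ("5m", cached_prefix_cost(FIVE_MINUTE, 10_000, arrivals, 1.0)),
    ("1h", cached_prefix_cost(ONE_HOUR, 10_000, arrivals, 1.0)),
]:
    print(label, cost)
# Expected output: uncached 60000.0, 5m 75000.0, 1h 25000.0
```

With one request every ten minutes, the five-minute entry lapses between calls, so every request pays the write premium and caching ends up costing more than not caching at all; the one-hour tier pays the premium once and then bills cheap reads. Under these assumptions, the right tier depends on how often a prefix is actually reused.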

The Financial Dynamics behind Prompt Cache Durations

The retention period for cached prompts, such as those sent to models like Claude, directly affects cost-effectiveness. A longer cache lifetime costs more to write but spares later requests from reprocessing the same prompt; however, every new piece of information added to the cache can evict an existing entry, creating trade-offs that demand careful orchestration.
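The eviction trade-off can be illustrated with a toy cache that has a fixed token budget, a time-to-live (TTL) on each entry, and least-recently-used (LRU) eviction. This is a hypothetical sketch, not how any particular provider manages its cache: the class name `PromptCache`, the capacity, and the TTL values are illustrative assumptions.

```python
from collections import OrderedDict


class PromptCache:
    """Toy prompt cache with a token budget, a per-entry TTL, and LRU eviction.

    Hypothetical model for illustration; production serving stacks are more complex.
    """

    def __init__(self, capacity_tokens, ttl_seconds):
        self.capacity_tokens = capacity_tokens
        self.ttl_seconds = ttl_seconds
        self.used_tokens = 0
        self._entries = OrderedDict()  # key -> (size_tokens, expires_at); oldest first

    def _drop(self, key):
        size, _ = self._entries.pop(key)
        self.used_tokens -= size

    def get(self, key, now):
        """Return True on a hit. A hit refreshes the TTL and marks the entry most recent."""
        entry = self._entries.get(key)
        if entry is None:
            return False
        size, expires_at = entry
        if now >= expires_at:  # lapsed entries count as misses
            self._drop(key)
            return False
        self._entries[key] = (size, now + self.ttl_seconds)
        self._entries.move_to_end(key)
        return True

    def put(self, key, size_tokens, now):
        """Cache an entry and return the keys evicted (least recently used first) to fit it."""
        if size_tokens > self.capacity_tokens:
            raise ValueError("entry is larger than the whole cache")
        if key in self._entries:
            self._drop(key)
        # Expired entries are discarded first; they would miss anyway.
        for k in [k for k, (_, exp) in self._entries.items() if now >= exp]:
            self._drop(k)
        evicted = []
        while self.used_tokens + size_tokens > self.capacity_tokens:
            oldest = next(iter(self._entries))
            self._drop(oldest)
            evicted.append(oldest)
        self._entries[key] = (size_tokens, now + self.ttl_seconds)
        self.used_tokens += size_tokens
        return evicted


cache = PromptCache(capacity_tokens=10_000, ttl_seconds=300)
cache.put("system-prompt", 6_000, now=0)
cache.put("doc-a", 3_000, now=10)
cache.get("system-prompt", now=20)          # hit: system prompt becomes most recent
print(cache.put("doc-b", 4_000, now=30))    # ['doc-a']
print(cache.get("doc-a", now=40))           # False
print(cache.get("system-prompt", now=400))  # False: TTL lapsed at 320
```

Admitting `doc-b` forces out `doc-a`, the least recently used entry, which is the cost of adding new information; separately, the system prompt's entry lapses once 300 seconds pass without a hit.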

Memory Coordination as a Competitive Advantage in Large-Scale AI Deployments

This delicate balance suggests that organizations that excel at managing memory resources will be well placed to lead future large-scale AI initiatives. By refining token usage through intelligent caching policies, these leaders can unlock significant operational efficiencies and cost savings.

Pioneering Advances Fueling Cache Performance Improvements

Emerging startups such as Tensormesh focus on optimizing cache utilization on inference servers, a critical layer that can increase inference throughput without a proportional rise in infrastructure cost or energy consumption.

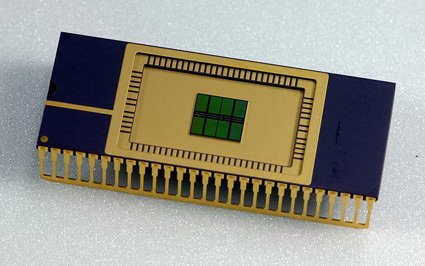

Diverse Memory Technologies Shaping Data Center Architectures

At the hardware level, the choice between DRAM and High Bandwidth Memory (HBM) substantially influences system speed and power efficiency. Higher up the stack, teams are experimenting with distributed model designs in which multiple instances share a common cache to make better use of available resources.
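The idea of several instances sharing one cache can be illustrated with a toy prefix cache: each prompt is split into fixed-size token blocks, each block's key is chained to the blocks before it, and any instance that finds a matching key can skip recomputing those tokens. This is a minimal sketch under stated assumptions; the block size, the hashing scheme, and the names `SharedPrefixCache` and `prefix_block_keys` are hypothetical and do not describe the design of any specific product.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per cached block; hypothetical (real systems use larger blocks)


def prefix_block_keys(tokens, block_size=BLOCK_SIZE):
    """One key per full block, chained so each key identifies the entire prefix so far."""
    h = hashlib.sha256()
    keys = []
    full = len(tokens) - len(tokens) % block_size
    for start in range(0, full, block_size):
        h.update(repr(tokens[start:start + block_size]).encode())
        keys.append(h.hexdigest())
    return keys


class SharedPrefixCache:
    """Toy cache that several model instances read from and write to."""

    def __init__(self):
        self._blocks = {}  # prefix key -> instance that computed it (stands in for cached state)

    def serve(self, instance, tokens):
        """Return how many prompt tokens this request can reuse instead of recomputing."""
        keys = prefix_block_keys(tokens)
        reused = 0
        for key in keys:
            if key not in self._blocks:
                break
            reused += BLOCK_SIZE
        # Publish every full block of this prompt so other instances can reuse it later.
        for key in keys:
            self._blocks.setdefault(key, instance)
        return reused


shared = SharedPrefixCache()
system_prompt = list(range(8))  # 8 token IDs shared by every request
print(shared.serve("instance-a", system_prompt + [100, 101, 102, 103]))       # 0: cold cache
print(shared.serve("instance-b", system_prompt + [200, 201, 202, 203, 204]))  # 8
```

The second instance reuses the two system-prompt blocks that the first instance computed and only has to process its own suffix. A trailing token that does not fill a whole block is never cached, one of the simplifications in this toy model.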

Toward More Cost-Effective and Scalable Artificial Intelligence Solutions

- Minimized Token Consumption: Better memory strategies reduce the number of tokens each query must process at full price, substantially cutting inference costs.

- Evolving Model Processing: Continuous improvements enable models to handle tokens faster while lowering computational costs compared to previous generations.

- Sustainable Profit Margins: As server overhead declines thanks to smarter memory use, many applications once considered financially unfeasible may become commercially viable.

A Practical Analogy: How Streaming Platforms Optimize Content Caching

This situation parallels how video streaming services manage content delivery networks (CDNs). By proactively caching popular videos closer to viewers during peak hours, and sometimes preloading content based on predictive analytics, they reduce bandwidth demands while improving the user experience. Similarly, effective prompt caching minimizes redundant computation and speeds up responses in AI systems operating at scale.