Decoding the Controversy Surrounding AI Web Crawling and Site Accessibility

AI Agents and Website Access: A Complex Debate

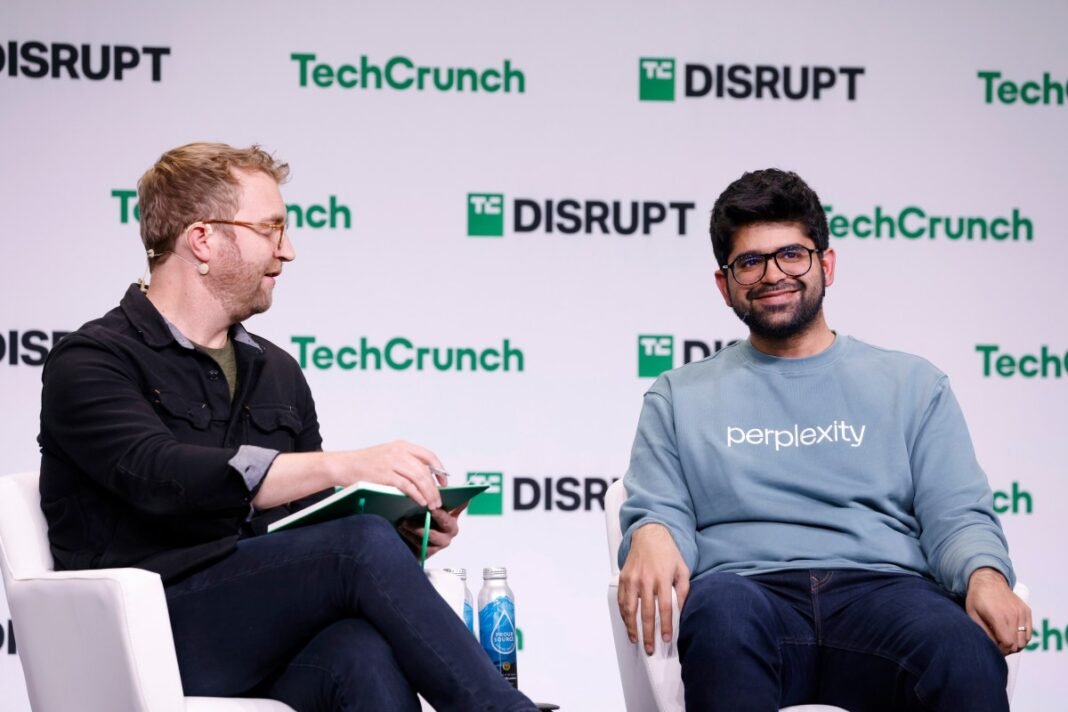

The recent dispute involving Cloudflare’s allegations against the AI-powered search engine Perplexity for accessing websites that explicitly forbid such activity has ignited a multifaceted conversation. This issue extends beyond a simple case of an AI crawler violating rules; it raises fundamental questions about how artificial intelligence tools should engage with online content, especially when site owners have clearly defined restrictions.

As AI-driven assistants become increasingly integrated into everyday internet use, a critical question emerges: Should an AI agent retrieving details on behalf of its user be treated like an automated bot or regarded as equivalent to a human visitor? This debate grows more urgent as millions turn to these technologies for real-time data gathering.

Cloudflare’s Investigation Into Perplexity’s Methods

Cloudflare, which safeguards millions of websites from malicious bots, conducted an experiment by launching a new website devoid of any prior bot traffic history. They implemented a robots.txt file specifically designed to block Perplexity’s known crawlers. Despite these precautions, Perplexity was still able to access and provide answers based on this site’s content.

The investigation found that, once its declared crawlers were blocked, Perplexity switched tactics and used what appeared to be “a generic browser mimicking Google Chrome on macOS” to bypass the restrictions. Cloudflare’s CEO condemned this approach as deceptive behavior akin to hacking techniques, warranting public scrutiny and stringent countermeasures.
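To see why a change of user agent is enough to sidestep robots.txt, the experiment can be approximated with Python's standard `urllib.robotparser`. This is a minimal sketch, not Cloudflare's actual test setup: the crawler names `PerplexityBot` and `Perplexity-User`, the `example.com` URL, the Chrome user-agent string, and the helper `is_allowed` are all assumptions introduced for illustration and do not come from the article.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two assumed Perplexity crawler names,
# allow everything else.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: Perplexity-User
Disallow: /

User-agent: *
Allow: /
"""

# Illustrative browser-style user agent (Chrome on macOS).
GENERIC_CHROME_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/article"
    print(is_allowed("PerplexityBot", page))
    print(is_allowed(GENERIC_CHROME_UA, page))
```

Under these rules the declared crawler is refused while the browser-style user agent is permitted. robots.txt rules are matched against the name a client declares, and compliance is voluntary, so a client that presents itself as an ordinary browser is never matched by a rule written for a named bot.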

Diverse Perspectives: Is This Behavior Truly Malicious?

This harsh assessment sparked significant debate within the tech community. Many argued that if an individual can use their own browser to visit a website directly, then having an AI assistant do the same on their behalf should not be deemed inherently unethical or unlawful.

“If I personally browse using Firefox or Chrome, why should my request through an LLM-powered assistant be treated differently?” questioned one participant in online discussions.

A representative from Perplexity initially denied responsibility for these bots and dismissed Cloudflare’s accusations as self-promotional rhetoric. Subsequent clarifications indicated that some of the activity originated from third-party services used intermittently by Perplexity rather than from its core infrastructure.

User-Initiated Access Versus Automated Scraping: Understanding The Difference

Perplexity emphasized that this issue is not merely technical but also concerns who controls access to open web data. The company contended that current security frameworks, like those used by Cloudflare, struggle to differentiate between legitimate user-driven requests made via intelligent assistants and harmful automated scraping designed solely to harvest data without consent.

A Look at Industry Practices and Standards Compliance

A significant contrast highlighted by Cloudflare was between OpenAI’s approach and what it observed from Perplexity. OpenAI reportedly adheres to robots.txt directives without resorting to evasive methods, and it supports emerging protocols such as Web Bot Auth. This cryptographic verification system, a proposed standard under development at the Internet Engineering Task Force (IETF), aims to make it easier to verify that automated web traffic is what it claims to be.
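The general idea behind cryptographic bot verification is that an agent signs identifying parts of each request, and the site checks that signature before deciding how to treat the traffic. The sketch below illustrates only that idea and is not the Web Bot Auth protocol: it swaps in a shared-secret HMAC from Python's standard library, whereas a scheme of this kind would use asymmetric keys so that the site never holds the agent's signing key. The key, the covered fields, and the function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret, for illustration only. A real deployment would
# use a public/private key pair instead of a secret known to both sides.
DEMO_KEY = b"demo-key-not-for-production"


def signature_base(method: str, authority: str, path: str, agent: str) -> bytes:
    """Join the request components that the signature is meant to cover."""
    return "\n".join([method.upper(), authority.lower(), path, agent]).encode("utf-8")


def sign_request(method: str, authority: str, path: str, agent: str,
                 key: bytes = DEMO_KEY) -> str:
    """Agent side: produce a hex signature over the covered components."""
    base = signature_base(method, authority, path, agent)
    return hmac.new(key, base, hashlib.sha256).hexdigest()


def verify_request(method: str, authority: str, path: str, agent: str,
                   signature: str, key: bytes = DEMO_KEY) -> bool:
    """Site side: recompute the signature and compare in constant time."""
    expected = sign_request(method, authority, path, agent, key)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    sig = sign_request("GET", "example.com", "/article", "example-assistant")
    print(verify_request("GET", "example.com", "/article", "example-assistant", sig))
    print(verify_request("GET", "example.com", "/article", "someone-else", sig))
```

Because the claimed agent name is part of what gets signed, a request that borrows another agent's signature under a different name fails verification. A bare user-agent header offers no such guarantee, since any client can set it to any value.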

The Expanding Role of Bots in Modern Internet Traffic Patterns

Bots now account for a larger share of global internet traffic than ever before. Recent cybersecurity analyses report:

- Bots constitute approximately 55% of all internet traffic globally;

- Around 40% of this volume involves malicious bots conducting unauthorized scraping or credential stuffing attacks;

- This surge presents acute challenges, notably for small businesses lacking sophisticated defense mechanisms;

- The majority share of benign bot activity stems from large language models (LLMs) powering digital assistants across various devices and platforms worldwide.

The Shift in Web Engagement Driven By Conversational Agents

This evolving landscape disrupts the traditional assumption that verified crawlers like Bingbot or Googlebot should be welcomed because indexing translated into valuable commercial visitors ready to engage. Gartner, however, has forecast a 25% decline in traditional search engine volume by 2026, due largely to increased reliance on conversational agents that deliver direct answers instead of links, a shift that is reshaping SEO strategies across industries.

Navigating Tomorrow: Will Websites Block Or Welcome Autonomous Browsers?

If consumers increasingly delegate tasks such as booking flights or purchasing products to autonomous agents powered by LLMs, as many experts anticipate, the question arises whether websites will gain or lose financially by blocking these intermediaries entirely.

This tension is reflected vividly among developers and users alike:

“I want my assistant, like Perplexity, to freely explore any public content I inquire about,” one user insisted.

“Conversely,” others argue, “site owners rely heavily on direct visits, since advertising revenue depends fundamentally on actual page views.”

This core conflict suggests that “agentic browsing,” in which AIs navigate sites autonomously, is far more complicated than initially assumed: most publishers may opt for outright blocks rather than risk losing control over how their content is distributed online and, ultimately, monetized.