Transforming Computational Theory: Unlocking the Hidden Potential of Memory Over Time

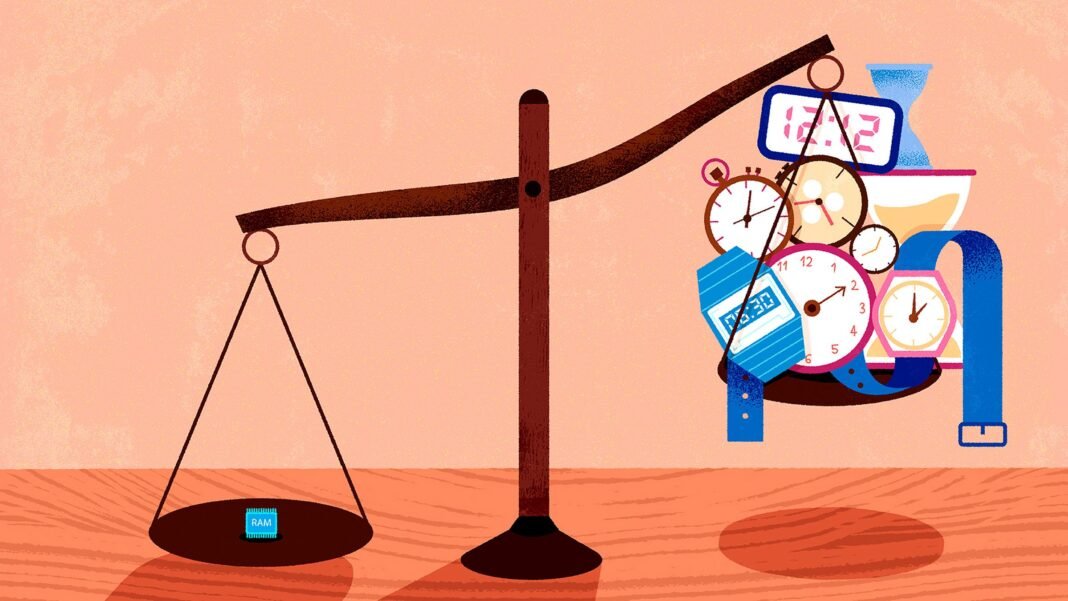

In computer science, two basic resources dictate how efficiently algorithms perform: time and memory (also referred to as space). Every computational task demands a certain execution duration and a specific amount of memory to hold data during processing. For decades, it was widely believed that, in general, an algorithm’s memory use could not be reduced much below its running time. Recent developments have overturned this long-held belief.

Reimagining the Balance Between Time and Space

The intricate relationship between time and space has been pivotal in understanding the limits of computation. An algorithm that runs for a given number of steps can touch at most that many memory cells, so its space use never exceeds its running time. For general algorithms, experts long suspected that this bound could not be improved by much: no universal technique seemed able to shrink memory usage far below the running time.

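A groundbreaking approach now reveals that any algorithm can be restructured to use dramatically less space. The new space requirement is roughly the square root of the original running time: more precisely, on the order of √(t log t) memory cells for a multitape Turing machine that runs for t steps. This comes at the cost of longer execution times. Because the restructured algorithms run more slowly, the trade-off may not immediately benefit everyday applications. It does, however, fundamentally reshape our theoretical understanding of how computational resources can be traded against each other.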
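To get a feel for the sizes involved, the sketch below compares three space bounds for a computation that runs for t steps:

- t itself (the trivial bound);
- t/log t (the bound from the 1975 Hopcroft-Paul-Valiant simulation);
- √(t log t) (the bound from the new simulation).

It is a back-of-the-envelope illustration only. It drops all constant factors and assumes base-2 logarithms. It also says nothing about running time, which grows considerably under the new simulation.

```python
import math

def trivial_space(t: float) -> float:
    """A t-step computation touches at most about t memory cells."""
    return t

def hpv_space(t: float) -> float:
    """Order of the 1975 Hopcroft-Paul-Valiant bound, t / log t (constants dropped)."""
    return t / math.log2(t)

def sqrt_space(t: float) -> float:
    """Order of the new square-root bound, sqrt(t log t) (constants dropped)."""
    return math.sqrt(t * math.log2(t))

# Compare the bounds for computations of a million, a billion, and a trillion steps.
for t in (10**6, 10**9, 10**12):
    print(f"t = {t:.0e}:  t/log t ~ {hpv_space(t):.2e},  sqrt(t log t) ~ {sqrt_space(t):.2e}")
```

For a trillion-step computation, the older bound still allows tens of billions of cells, while the square-root bound is in the millions. For small t the asymptotic formulas are not meaningful. With base-2 logarithms, √(t log t) only drops below t/log t once t reaches roughly a thousand.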

Early Milestones in Complexity Theory

The formal study of computational resources began with Juris Hartmanis and Richard Stearns in the 1960s, when they introduced precise metrics for measuring the time and space a computation uses. These definitions paved the way for complexity classes such as P and PSPACE. P contains the problems solvable efficiently (in polynomial time). PSPACE contains those solvable using a polynomial amount of memory, no matter how much time that takes.

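Every problem in P is also in PSPACE, because an algorithm that runs for a polynomial number of steps can touch only a polynomial number of memory cells. A central open question has been whether the two classes coincide or whether PSPACE strictly contains P. In other words, are there problems that can be solved with a modest amount of memory but cannot be solved quickly?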
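The reason every problem in P is also in PSPACE can be checked directly on a toy machine. The sketch below simulates a single-tape Turing machine and counts both the steps taken and the distinct tape cells visited. Since the head moves one cell per step, the number of cells visited can never exceed the number of steps plus one. Two things here are illustrative assumptions, not taken from the article:

- the machine format, a dictionary from (state, symbol) to (next state, symbol to write, head move);
- the two-state example machine.

```python
from collections import defaultdict
from typing import Dict, Tuple

# (state, symbol read) -> (next state, symbol to write, head move of -1 or +1)
Transitions = Dict[Tuple[str, int], Tuple[str, int, int]]

def run(transitions: Transitions, start: str, halt: str, max_steps: int) -> Tuple[int, int]:
    """Run the machine from a blank tape; return (steps taken, distinct cells visited)."""
    tape = defaultdict(int)  # every unwritten cell reads as blank (0)
    head, state, steps = 0, start, 0
    visited = {head}
    while state != halt and steps < max_steps:
        state, write, move = transitions[(state, tape[head])]
        tape[head] = write
        head += move
        visited.add(head)
        steps += 1
    # The head reaches at most one new cell per step, so space never exceeds time + 1.
    assert len(visited) <= steps + 1
    return steps, len(visited)

# A small two-state example machine (a hypothetical choice for illustration).
EXAMPLE = {
    ("A", 0): ("B", 1, +1),
    ("A", 1): ("B", 1, -1),
    ("B", 0): ("A", 1, -1),
    ("B", 1): ("H", 1, +1),
}

steps, cells = run(EXAMPLE, start="A", halt="H", max_steps=1_000)
print(f"{steps} steps, {cells} distinct cells visited")  # prints: 6 steps, 4 distinct cells visited
```

The same counting argument applies to any machine and any running time. A polynomial-time algorithm therefore automatically fits in polynomial space; the hard direction is the converse.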

Pioneering Universal Simulation Techniques

In 1975, John Hopcroft, Wolfgang Paul, and Leslie Valiant introduced a universal simulation method. It converts any algorithm running for t steps into one using about t/log t memory cells, slightly less than the original running time. This result established an early connection between time complexity and space requirements. It showed that anything achievable within a given time bound can also be done with somewhat less space.

A Half-Century Barrier Surpassed by Novel Insights

Despite the initial excitement over the Hopcroft-Paul-Valiant result, progress stalled. The method ran into inherent limits under a natural assumption: that each piece of data occupies its own exclusive storage location at every step of the computation. For nearly fifty years, theorists believed no significant improvement over the simulation was feasible. Recent advances finally broke through this ceiling using fresh ideas about computation with very limited space.
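The “exclusive storage location” assumption is commonly modeled as a pebble game on the graph of a computation. The rules are:

- a pebble on a node means that node’s value is currently stored;
- a pebble may be placed on a node only when all of the node’s inputs carry pebbles;
- pebbles can be removed at any time.

The maximum number of pebbles in use at once plays the role of memory. The sketch below checks a proposed move sequence under these rules. The graph encoding and the move format are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

def peak_pebbles(preds: Dict[str, List[str]], moves: List[Tuple[str, str]], target: str) -> int:
    """Validate a pebbling move sequence and return the most pebbles used at once."""
    pebbled = set()
    peak = 0
    reached_target = False
    for action, node in moves:
        if action == "place":
            # A value can be stored only if all of its inputs are stored at that moment.
            missing = [p for p in preds[node] if p not in pebbled]
            if missing:
                raise ValueError(f"cannot place on {node}: inputs {missing} not pebbled")
            pebbled.add(node)
            peak = max(peak, len(pebbled))
            reached_target = reached_target or node == target
        elif action == "remove":
            pebbled.discard(node)  # freeing a storage slot is always allowed
        else:
            raise ValueError(f"unknown action {action!r}")
    if not reached_target:
        raise ValueError(f"target {target} was never pebbled")
    return peak

# A complete binary tree of height 2: leaves feed a and b, which feed the root r.
TREE = {"a1": [], "a2": [], "b1": [], "b2": [], "a": ["a1", "a2"], "b": ["b1", "b2"], "r": ["a", "b"]}
MOVES = [
    ("place", "a1"), ("place", "a2"), ("place", "a"), ("remove", "a1"), ("remove", "a2"),
    ("place", "b1"), ("place", "b2"), ("place", "b"), ("remove", "b1"), ("remove", "b2"),
    ("place", "r"),
]
print(peak_pebbles(TREE, MOVES, target="r"))  # prints: 4
```

Here every stored value costs one whole pebble, even after it has served its purpose as an input. That rigidity is what the later “squishy” view relaxes.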

The Catalyst: Flexible Data Storage and the “Squishy Pebbles” Concept

The breakthrough traces back to a strategy that James Cook (son of the complexity theorist Stephen Cook) and Ian Mertz developed shortly before 2024. They were working on a problem known as tree evaluation, in which values are computed level by level from the leaves of a tree up to its root. Their approach represented data in a flexible way, metaphorically described as “squishy pebbles.” It lets stored information overlap instead of occupying the rigidly separated bits traditionally assumed necessary for minimal-memory computation.
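The sketch below states the tree evaluation problem in code:

- each leaf of a binary tree holds a small value;
- each internal node holds a function of its two children’s values;
- the task is to compute the value at the root.

This is only the straightforward depth-first method. It holds one pending value per level of the tree, about height × log k bits for values drawn from k possibilities. It is not the Cook-Mertz algorithm, whose point is to use substantially less space than this. The tuple-based tree encoding and the example functions are hypothetical.

```python
from typing import Callable, Tuple, Union

# Hypothetical encoding: a leaf is an int in range(k); an internal node is (f, left, right).
Node = Union[int, Tuple[Callable[[int, int], int], "Node", "Node"]]

def evaluate(node: Node) -> int:
    """Naive depth-first tree evaluation."""
    if isinstance(node, int):
        return node
    f, left, right = node
    a = evaluate(left)   # this value stays in memory while the right subtree is evaluated
    b = evaluate(right)
    return f(a, b)

K = 4  # values come from {0, 1, 2, 3}

def add(x: int, y: int) -> int:
    return (x + y) % K

def mul(x: int, y: int) -> int:
    return (x * y) % K

tree = (mul, (add, 3, 2), (add, 1, 1))
print(evaluate(tree))  # (3+2) mod 4 = 1, (1+1) mod 4 = 2, (1*2) mod 4 = 2, so prints: 2
```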

“We discovered that bits don’t need to behave like indivisible pebbles occupying distinct spots; instead they can partially overlap or share representation,” explained Paul Beame of the University of Washington.

This insight opened up possibilities for ultra-low-space algorithm design that had previously been considered infeasible.

Expanding Squishy Pebble Techniques into Universal Simulations

This conceptual leap inspired Ryan Williams after students presented the Cook-Mertz findings in his MIT course early in 2024. He recognized that the technique could apply well beyond specialized problems like tree evaluation and asked: why not build squishy pebbles into a universal simulation? His simulation recasts any time-bounded computation as a series of tree evaluation instances and then applies the Cook-Mertz algorithm. The result achieves far greater space savings for all algorithms than the approach that had stood for nearly half a century.

Theoretical Breakthroughs Quantify Time-Space Trade-offs More Precisely

Williams’ simulation rigorously establishes a quantitative gap between what memory-limited algorithms can achieve and what time-limited algorithms can achieve. That is a concrete step toward, though not a proof of, the conjecture that PSPACE is strictly larger than P.

In practical terms, a small amount of memory turns out to be as powerful as a much larger amount of time: any computation running for t steps can be carried out in roughly √t memory. The flip side is that some problems solvable within a given amount of memory provably demand far more time, and we can now say more precisely than ever how much more.

- Encouraging outcome: algorithms operating under strict memory limits can still solve every problem that is solvable within a much larger time bound, because the new simulation dramatically reduces the required workspace.

- Cautionary note: some tasks that fit within a given amount of memory cannot be solved in much less than roughly the square of that amount of time.
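The cautionary note can be made precise with a short calculation, ignoring constant factors. It rests on two assumptions. The first is the new simulation bound: a multitape Turing machine running in time t(n) ≥ n can be simulated in space O(√(t log t)). The second is the classical space hierarchy theorem: some problem solvable in O(n) space cannot be solved in o(n) space. The sketch runs as follows:

$$
\begin{aligned}
t(n) &= \frac{n^{2}}{(\log n)^{c}}, \qquad c > 1,\\
\sqrt{t(n)\,\log t(n)} &\le \sqrt{\frac{n^{2}}{(\log n)^{c}}\cdot 2\log n} \;=\; \frac{\sqrt{2}\,n}{(\log n)^{(c-1)/2}} \;=\; o(n),\\
\mathrm{TIME}[t(n)] &\subseteq \mathrm{SPACE}[o(n)] \subsetneq \mathrm{SPACE}[n].
\end{aligned}
$$

The inequality in the second line uses log t(n) ≤ 2 log n, which holds because t(n) ≤ n². Hence some problem solvable in linear space cannot be solved in time n²/(log n)^c for any c > 1; in other words, it needs nearly quadratic time.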

Toward Resolving the P Versus PSPACE Conundrum

This advance does not settle whether every problem solvable with a polynomial amount of memory can also be solved in polynomial time. It does, however, equip researchers with powerful new tools that might be pushed much further.

Some researchers hypothesize that the simulation could be applied iteratively, widening the gap between time and space a little more with each round. If so, the long-sought separation of P from PSPACE might finally become provable after a decades-long stalemate.

Nurturing Curiosity Amid Expansive Beginnings

Ryan Williams was born in rural Alabama, where wide-open landscapes contrasted sharply with scarce opportunities to use computers. His interest in computing began in childhood with simple digital light displays that generated unpredictable patterns. Those displays sparked a lifelong fascination with how randomness and structure intertwine inside machines. Such machines now perform invisible yet omnipresent calculations for billions of people worldwide; by some estimates, about 6 billion people use smartphones. Behind them, cloud infrastructure handles petabytes of data daily as internet traffic keeps growing year over year.

Cultivating Passion Through Academic Rigor

As an undergraduate at Cornell University, Williams faced early academic challenges in coursework that emphasized mathematical precision over intuition, but he persisted. Cornell is a historic hub closely linked with pioneers who defined complexity theory, and mentorship rooted in that foundational work paid off. This solid grounding supported his later contributions, including landmark results on the classification of hard problems. It culminated recently in a breakthrough on a long-standing puzzle about the interplay between computation’s most precious commodities: time and space.

Charting Future Directions in Computational Complexity

Ryan Williams’ proof is arguably one of the most significant advances in this area since the mid-1970s, yet numerous mysteries remain unresolved. One part of the path ahead is technical refinement, such as strengthening the simulation, possibly through iterative amplification. Another is conceptual interpretation: applying the insights across diverse fields. These range from cryptography, which secures trillions of dollars in online transactions worldwide each year, to artificial intelligence, where massive datasets demand balanced resource management. They extend to quantum computing, which promises fundamentally new paradigms. Researchers remain cautiously optimistic. The lessons learned so far emphasize patience as an essential virtue in unraveling the deepest secrets encoded in the abstract machines we call computers.

“Breakthroughs often emerge unexpectedly, not because they were directly sought but because curiosity led us down overlooked paths,” reflected an expert familiar with ongoing research efforts.

“Williams’ achievement exemplifies how perseverance combined with fresh perspectives revitalizes fields once considered stagnant.”