How Cutting-Edge AI Models Acquire an Intuitive Grasp of the Physical World

From Early Human Curiosity to Machine Perception

Imagine observing a toddler: place a cup of juice on a table, then conceal it behind a curtain. Many infants around six months old show surprise if the curtain is drawn back and the cup appears to have vanished, and by their first birthday nearly all children understand that objects continue to exist even when out of sight. Remarkably, some advanced artificial intelligence models are beginning to demonstrate comparable intuitive reasoning about physical reality.

Learning Physical Dynamics Through Visual Data Alone

A new AI system called Video Joint Embedding Predictive Architecture (V-JEPA), developed by Meta, learns about its surroundings exclusively from video footage. Without any preprogrammed rules about physics or object behavior, the system gradually learns how objects move and interact across space and time.

This contrasts sharply with traditional AI approaches that analyze videos at the pixel level. Pixel-based methods treat every pixel as equally important, so they often get sidetracked by irrelevant visual noise, such as flickering shadows or waving tree branches, and overlook critical details like traffic signals or pedestrians in complex urban scenes.

The Pitfalls of Pixel-Centric Video Analysis

Consider an autonomous car navigating through bustling city streets filled with cyclists, vehicles, and traffic signs. A model focused solely on pixels might expend computational effort tracking insignificant motions like fluttering leaves instead of prioritizing essential cues for safe driving decisions. This limitation has long impeded dependable scene interpretation in computer vision systems.
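A toy calculation makes the pitfall concrete. In the hypothetical 32x32 scene below, a flickering checkerboard stands in for fluttering leaves and a 2-pixel-wide block stands in for a pedestrian who steps 2 pixels to the right between frames. The `pedestrian_x` function is a hand-written stand-in for what a learned encoder might keep; it is an illustrative assumption, not how V-JEPA computes its representations.

```python
W, H = 32, 32
FOLIAGE_ROWS = range(0, 16)       # top half: swaying leaves (irrelevant to driving)
PEDESTRIAN_ROWS = range(20, 24)   # a small figure crossing the street (relevant)

def render(ped_x, leaf_phase):
    """Build a toy grayscale frame as a list of rows (hypothetical scene)."""
    img = [[0.0] * W for _ in range(H)]
    for y in FOLIAGE_ROWS:
        for x in range(W):
            # Checkerboard texture whose pattern flips when leaf_phase changes.
            img[y][x] = 0.5 if (x + y + leaf_phase) % 2 else 1.0
    for y in PEDESTRIAN_ROWS:
        for x in range(ped_x, ped_x + 2):
            img[y][x] = 1.0
    return img

def pixel_sq_error(a, b, rows):
    """Sum of squared pixel differences over the given rows."""
    return sum((a[y][x] - b[y][x]) ** 2 for y in rows for x in range(W))

def pedestrian_x(img):
    """Hypothetical 'abstract' feature: the pedestrian's mean column, nothing else."""
    cols = [x for y in PEDESTRIAN_ROWS for x in range(W) if img[y][x] == 1.0]
    return sum(cols) / len(cols)

f0 = render(ped_x=10, leaf_phase=0)
f1 = render(ped_x=12, leaf_phase=1)   # leaves flicker AND pedestrian steps 2 px right

leaf_err = pixel_sq_error(f0, f1, FOLIAGE_ROWS)      # 512 pixels * 0.5**2 = 128.0
ped_err = pixel_sq_error(f0, f1, PEDESTRIAN_ROWS)    # 16 pixels * 1.0**2 = 16.0
print(leaf_err / (leaf_err + ped_err))               # share of pixel error from foliage
print(pedestrian_x(f1) - pedestrian_x(f0))           # 2.0
```

About 89% of the pixel-level error (128 of 144) comes from the leaves, even though the pedestrian's movement is what matters for driving; a representation that keeps only the pedestrian's position registers the move as a clean change of 2.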

Embracing Abstract Representations for Deeper Understanding

The V-JEPA model avoids these problems by operating on latent representations: compressed abstractions that capture key features such as an object's shape and position while filtering out extraneous visual clutter.

An architect's blueprint offers an analogy: rather than depicting every brick or surface texture, it outlines basic structures like walls and doorways that convey the essential spatial information. Similarly, V-JEPA's encoder networks convert complex video frames into concise numerical summaries of their essential properties; a decoder can reconstruct images from these summaries when needed.
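As a rough illustration of the encode/decode idea, the sketch below swaps in principal component analysis (PCA), a classical linear technique, for V-JEPA's learned neural encoder and decoder. It compresses synthetic 8x8 frames, each showing a small square at one of six positions plus faint noise, into six numbers and back. The synthetic data, the helper `fit_linear_codec`, and the choice of six components are illustrative assumptions, not part of V-JEPA.

```python
import numpy as np

def fit_linear_codec(frames, k):
    """Fit a k-number linear encoder/decoder pair (PCA) to flattened frames.
    PCA is a stand-in here; V-JEPA's encoders are learned neural networks."""
    mean = frames.mean(axis=0)
    # Rows of vt are principal directions, ordered by how much variance they explain.
    _, _, vt = np.linalg.svd(frames - mean, full_matrices=False)
    basis = vt[:k]                                   # shape (k, n_pixels)

    def encode(frame):
        return (frame - mean) @ basis.T              # 64 pixel values -> k numbers

    def decode(code):
        return code @ basis + mean                   # k numbers -> approximate frame

    return encode, decode

rng = np.random.default_rng(0)
frames = []
for _ in range(200):
    img = np.zeros((8, 8))
    col = rng.integers(0, 6)                         # the "object" sits at one of 6 columns
    img[3:5, col:col + 2] = 1.0                      # a 2x2 bright square
    img += 0.05 * rng.normal(size=(8, 8))            # faint pixel noise (the "clutter")
    frames.append(img.ravel())
frames = np.array(frames)                            # shape (200, 64)

encode, decode = fit_linear_codec(frames, k=6)
code = encode(frames[0])
recon = decode(code)
print(code.shape)                                    # (6,)
print(np.abs(recon - frames[0]).mean())              # small: mostly the discarded noise
```

Because the square's position accounts for nearly all of the variation between frames, a handful of numbers captures it, while the pixel noise, like the bricks left off a blueprint, is largely discarded.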

The Mechanism Behind V-JEPA’s Learning Process

- Masked Frame Encoding: Portions of multiple video frames are deliberately obscured before being processed by one encoder (encoder 1), which generates latent codes representing incomplete inputs.

- Complete Frame Encoding: The complete, unmasked frames pass through a second encoder (encoder 2), producing latent codes for the full input with no missing data.

- Predictive Modeling: A predictor network is trained to estimate encoder 2's outputs based solely on the masked-frame latents from encoder 1, effectively forecasting the hidden content at an abstract level rather than guessing individual pixels.

This strategy lets V-JEPA concentrate its computational resources on meaningful elements (for example, moving pedestrians rather than swaying foliage) and discard irrelevant distractions during both training and inference.

Tackling Real-World Physics With Remarkable Precision

Researchers evaluated the approach on benchmarks designed to measure intuitive physical reasoning in machines. On tests of object permanence (the understanding that hidden objects still exist) and of physical plausibility (for instance, whether objects obey gravity and collide naturally), V-JEPA reached accuracy approaching 98%, far outperforming older pixel-focused models, which hovered near chance.
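What does "near chance" mean here? A common protocol for such benchmarks, assumed here rather than taken from the source, shows the model matched pairs of videos, one physically possible and one impossible, and counts the model correct when its surprise score is higher for the impossible one. Under that protocol a model with no physical intuition scores about 50%. The function `paired_accuracy` and all scores below are illustrative, not real results.

```python
import random

def paired_accuracy(pairs):
    """Fraction of (possible, impossible) video pairs in which the model is more
    surprised by the impossible video. Each item holds two surprise scores."""
    correct = sum(1 for possible, impossible in pairs if impossible > possible)
    return correct / len(pairs)

# Made-up scores for illustration: the model is right on 2 of 3 pairs.
toy_scores = [(0.10, 0.90), (0.20, 0.70), (0.50, 0.40)]
print(paired_accuracy(toy_scores))

# A model whose surprise is unrelated to physics lands near 50%, i.e. chance.
rng = random.Random(0)
random_scores = [(rng.random(), rng.random()) for _ in range(10_000)]
print(paired_accuracy(random_scores))
```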

Mimicking Human Surprise Responses Through Prediction Errors

A compelling feature is that V-JEPA exhibits “surprise” when confronted with events that violate the physical regularities it has learned, for example when a ball rolls behind an obstacle and fails to reappear as expected. By measuring the discrepancy between the model's predictions of upcoming frames and what actually happened, researchers observed error spikes that coincided precisely with physically implausible events, a reaction reminiscent of infants' surprise in similar occlusion experiments with hidden objects.
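The surprise measurement can be sketched as follows. The latents here are hand-written two-number stand-ins, `[ball_x, ball_visible]`, for the learned, high-dimensional vectors a real encoder produces, and the spike rule (error above the running mean plus three standard deviations) is an assumed heuristic, not the researchers' published procedure. `surprise_curve` and `flag_spikes` are hypothetical names.

```python
import statistics

def surprise_curve(predicted, observed):
    """Per-time-step mean absolute error between predicted and observed latents."""
    return [sum(abs(p - o) for p, o in zip(pv, ov)) / len(pv)
            for pv, ov in zip(predicted, observed)]

def flag_spikes(curve, k=3.0, warmup=5):
    """Time steps whose error exceeds the running mean + k standard deviations
    (an assumed heuristic for turning the error curve into 'surprise' events)."""
    spikes = []
    for t in range(warmup, len(curve)):
        history = curve[:t]
        threshold = statistics.fmean(history) + k * statistics.pstdev(history)
        if curve[t] > threshold:
            spikes.append(t)
    return spikes

# Toy latent per frame: [ball_x, ball_visible]. The ball passes behind an
# occluder for frames 5-9; the model expects it to reappear at frame 10.
predicted = [[t, 0.0 if 5 <= t < 10 else 1.0] for t in range(15)]
# In the "impossible" video the ball never comes back out.
observed = [[t, 0.0 if t >= 5 else 1.0] for t in range(15)]

curve = surprise_curve(predicted, observed)
print(flag_spikes(curve)[0])    # 10: the frame where the ball should have reappeared
```

Before frame 10 the predictions match the observations exactly, so the error is zero; when the ball fails to reappear, the error jumps, and that jump is the machine analogue of surprise.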

The Future Landscape: Enhancements Driving Robotics Innovation

The latest version, V-JEPA 2, released in 2025, has more than one billion parameters and was trained on more than 22 million diverse videos. This scale enables a richer understanding of the environment, but the model's short-term memory is limited: each input sequence spans only a few seconds of video.

- This limitation means that longer sequences are not retained effectively, a constraint researchers have jokingly compared not only to an infant's memory but also to a goldfish's.

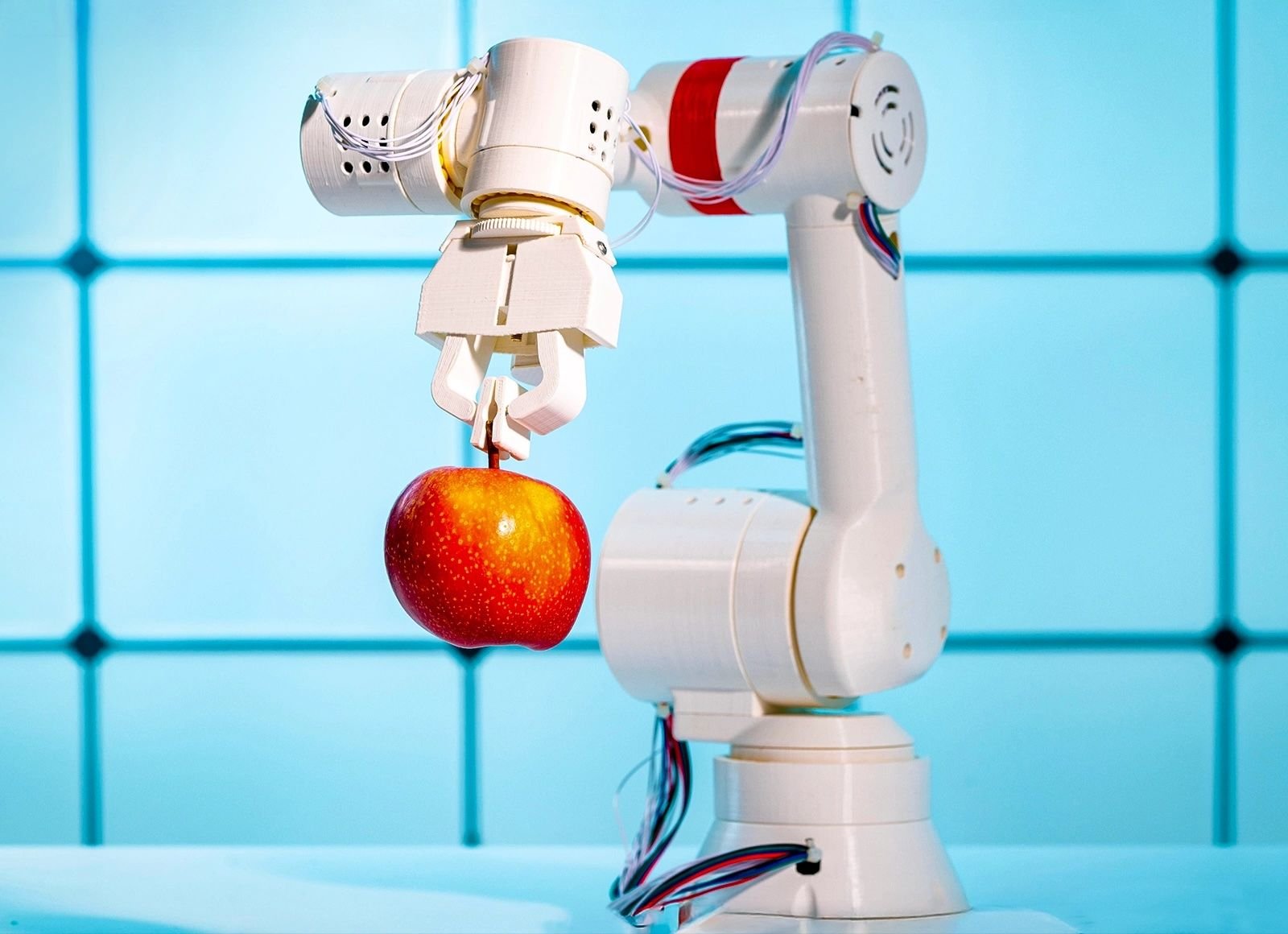

Robotics is a promising application. Fine-tuning part of the model on roughly sixty hours of robot operation data yields a V-JEPA-derived predictor that lets a robot plan its next actions from learned environmental dynamics, without exhaustive retraining whenever the task changes significantly.
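Planning with a learned predictor can be sketched as a search over imagined futures: try candidate action sequences, roll each forward in latent space, and keep the one whose predicted outcome lands closest to a goal representation. In this minimal sketch the fine-tuned, action-conditioned predictor is replaced by plain vector addition (an assumption), latents are 2-number vectors, and simple random shooting stands in for whatever trajectory optimizer the actual system uses. `predict`, `latent_distance`, and `plan` are hypothetical names.

```python
import random

def predict(z, a):
    """Hypothetical action-conditioned predictor: (latent state, action) -> next latent.
    A fine-tuned network would go here; plain addition keeps the sketch small."""
    return [zi + ai for zi, ai in zip(z, a)]

def latent_distance(z, goal):
    """L1 distance between two latent vectors."""
    return sum(abs(zi - gi) for zi, gi in zip(z, goal))

def plan(z0, goal, horizon=3, samples=1000, max_step=1.0, seed=0):
    """Random-shooting planner: sample action sequences, imagine their outcome with
    the predictor, and return the first action of the sequence ending nearest the goal."""
    rng = random.Random(seed)
    best_cost, best_seq = float("inf"), None
    for _ in range(samples):
        seq = [[rng.uniform(-max_step, max_step) for _ in z0] for _ in range(horizon)]
        z = z0
        for a in seq:
            z = predict(z, a)                  # roll forward in latent space only
        cost = latent_distance(z, goal)
        if cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq[0], best_cost

# Receding-horizon control: plan, execute only the first action, observe, replan.
z, goal = [0.0, 0.0], [2.0, 1.0]
for step in range(3):
    action, cost = plan(z, goal, seed=step)
    z = predict(z, action)   # on a real robot: execute, then re-encode the camera image
    print(step, [round(v, 2) for v in z], round(cost, 2))
```

Only the first action of the best sequence is executed before replanning, so errors in the predictor's imagined future can be corrected by fresh observations at every step.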

Navigating Ambiguity: The Next Challenge for Intuitive AI Systems

“While current architectures excel at uncovering patterns underlying observed phenomena,” experts note, “they lack explicit mechanisms encoding uncertainty levels associated with predictions, an essential factor for real-world decision-making under ambiguous conditions.”

This gap highlights the hurdles that remain before machines can replicate human-like cognition, in which confidence judgments guide adaptive behavior when information is incomplete, as it routinely is outside controlled laboratory settings.

V-JEPA marks a meaningful step toward equipping machines with genuine physical intuition learned purely from raw sensory input. Its success points to promising pathways that bridge developmental psychology and state-of-the-art machine learning, and could help usher in smarter autonomous systems capable of safer navigation, more natural interactions, and a deeper comprehension of their surroundings, akin to the innate faculties found in living beings.